Tensor Decompositions

Tensors are multidimensional generalizations of vectors and matrices represented by arrays with $n$ indices, i.e.,

$$\mathbf{T} \in \mathbb{C}^D = \mathbb{C}^{d_1 \times \dots \times d_n}$$

is a tensor of order $n$, where $D = (d_1, \dots, d_n)$ is called mode set. In what follows, we will denote tensors by bold letters and refer to an element of a tensor $\mathbf{T}$ using subscript indices. The storage consumption of such a tensor can be estimated as $O(d^n)$ where $d$ is the maximum of all modes. Thus, storing a higher-order tensor is in general infeasible for large n since the number of elements of a tensor grows exponentially with the order - this is also known as the curse of dimensionality. However, it is possible to mitigate this problem by exploiting low-rank tensor approximations in many cases.

The essential operation used for tensor decompositions is the so-called tensor product: Given a tensor $\mathbf{T}$ of order $n$ and a tensor $\mathbf{U}$ of order $m$, the tensor product is defined by

$$(\mathbf{T} \otimes \mathbf{U})_{x_1, \dots, x_n, y_1, \dots , y_m} = \mathbf{T}_{x_1, \dots, x_n} \mathbf{U}_{y_1, \dots, y_m},$$

for any possible combination of mode indices.

In order to efficiently represent high-dimensional systems - e.g., probability distributions, transformed data tensors, or quantum states - we focus on tensor trains (TT) a.k.a. matrix product states (MPS). The TT/MPS format is a special case of the more general hierarchical Tucker format and turned out to be a promising candidate in terms of storage consumption as well as computational robustness. A tensor $\mathbf{T}$ is said to be in the MPS/TT format if

$$\mathbf{T} = \sum_{k_0=1}^{r_0} \dots \sum_{k_n=1}^{r_n} \bigotimes_{i=1}^n \mathbf{T}^{(i)}_{k_{i-1},:,k_i} = \sum_{k_0=1}^{r_0} \dots \sum_{k_n=1}^{r_n} \mathbf{T}^{(1)}_{k_0,:,k_1} \otimes \dots \otimes \mathbf{T}^{(n)}_{k_{n-1}, :, k_n}.$$

The variables $r_i$ are called bond dimensions or TT ranks and it holds that $r_0 = r_n = 1$ and $r_i \geq 1$ for $i=1 , \dots, n-1$. The tensors $\mathbf{T}^{(i)} \in \mathbb{C}^{r_{i-1} \times d_i \times r_i}$ are called (TT) cores. Each element of the tensor $\mathbf{T}$ can be written as

$$\mathbf{T}_{x_1, \dots, x_n} = \mathbf{T}^{(1)}_{1,x_1,:} \mathbf{T}^{(2)}_{:,x_2,:} \cdots \mathbf{T}^{(n-1)}_{:,x_{n-1},:} \mathbf{T}^{(n)}_{:,x_n,1},$$

which explains the origin of the name matrix product states (MPS). If the ranks are small enough, we may reduce the storage consumption of an order-$n$ tensor significantly: Instead of an exponential dependence, the storage then depends only linearly on the order and can be estimated as $\mathcal{O}(r^2 d n)$, where $r$ is the maximum over all ranks. That is, if the underlying correlation structure admits such a low-rank decomposition, an enormous reduction in complexity can be achieved.

A linear operator $\mathbf{G} \in \mathbb{C}^{D \times D}$ in the MPO/TT format, can be written as

$$ \mathbf{G} = \sum_{k_0=1}^{R_0} \dots \sum_{k_n=1}^{R_n} \bigotimes_{i=1}^n \mathbf{G}^{(i)}_{k_{i-1},:,:,k_i} = \sum_{k_0=1}^{R_0} \dots \sum_{k_n=1}^{R_n} \mathbf{G}^{(1)}_{k_0,:,:,k_1} \otimes \dots \otimes \mathbf{G}^{(n)}_{k_{n-1}, :, :, k_n}.$$

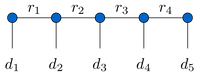

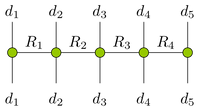

Here, the cores are tensors of order $4$. Figure 1 (a) and (b) show the graphical representation of an MPS $\mathbf{T} \in \mathbb{C}^{D}$ and an MPO $\mathbf{G} \in \mathbb{C}^{D \times D}$ with $D=(d_1, \dots, d_5)$, respectively.

(a) | (b) |

Figure 1: Graphical representation of the MPS/MPO format: A core is depicted by a circle with different arms indicating the modes of the tensor and the rank indices. (a) Tensor of order 5 as MPS with ranks $r_1, r_2, r_3, r_4$. The first and the last core are matrices, the other cores are tensors of order 3. (b) An MPO of order 10 with ranks $R_1, R_2, R_3, R_4$. The first and the last core are tensors of order 3, the other cores are tensors of order 4.

Tensor trains have become a widely-studied concept, which found its way into various scientific fields such as quantum mechanics, dynamical systems, system identification, and machine learning.