Faster Algorithms for Structured Matrix Multiplication via Flip Graph Search

Matrix multiplication is one of the core primitives in numerical linear algebra, but in practical applications we rarely multiply fully general matrices. Instead, we often deal with structured cases such as symmetric, triangular, skew-symmetric, or transpose products like $A A^\top$. These structured variants all share the same asymptotic exponent $\omega$ as general matrix multiplication, but their multiplicative constants can differ significantly. Improving these constants yields real speed-ups in recursively defined algorithms.

In our work [1], we systematically explore structured matrix multiplication for all formats up to size $5\times5$ and present a comprehensive catalogue of fast bilinear algorithms discovered through flip-graph search. Each algorithm corresponds to a low-rank decomposition of the underlying multiplication tensor. For a bilinear map encoded by $T_{ijk}$, a fast algorithm is a decomposition

$$T_{ijk} = \sum_{q=1}^{r} U_{qi} V_{qj} W_{qk},$$which computes the product using only $r$ scalar multiplications. Reducing $r$ is the core goal, and classical examples such as the $\langle 2,2,2:7\rangle$ Strassen scheme illustrate how powerful such reductions can be.

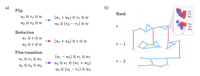

To navigate the enormous search space of possible decompositions, we employ flip-graph search. In this graph, each node is a valid sum of rank-1 tensors, and edges correspond to local transformations such as flips, reductions, or plus-transitions, all of which preserve correctness. Random walks on this graph allow us to efficiently discover low-rank schemes even for nontrivial structured multiplication tensors. A conceptual illustration is shown here:

Figure 1: Flip graph structure and operations. a) Types of transformations between tensor decompositions. b) The flip graph organizes schemes by rank. Horizontal edges (blue) correspond to flips within a fixed rank level. Vertical edges (red) correspond to reductions that decrease rank.

A key feature of our approach is that we operate over finite fields $\mathbb{F}_2$ and $\mathbb{F}_3$, where tensor operations are extremely fast. Many good schemes arise in $\mathbb{F}_2$, but some of the best ones fundamentally require the inverse of $2$ and therefore only appear in $\mathbb{F}_3$. When possible, we lift finite-field solutions to integer or rational schemes through Hensel lifting, producing algorithms suitable for real or complex arithmetic.

To classify all relevant structured formats, we use the symbols $g$ (general), $u$ (upper-triangular), $l$ (lower-triangular), $s$ (symmetric), $k$ (skew-symmetric), and $t$ (transpose). Accounting for identities such as $C^\top = B^\top A^\top$ and symmetry between upper and lower structures yields 15 distinct formats. For recursion we additionally introduce the auxiliary structure $w$ (skew-symmetric with free diagonal), which is necessary for non-commutative block structures.

Across all these formats, we compute asymptotic multiplicative constants $\gamma_{ab}$ by analyzing how recursive calls preserve or change structure. These constants derive from $$\gamma_{ab}=\frac{r - q_{ab} - q_{ag}(1 - \gamma_{ag}) - q_{gb}(1 - \gamma_{gb})}{k^{\omega} - q_{ab}},$$where $q_{ab}$, $q_{ag}$, and $q_{gb}$ count structure-preserving block multiplications. Our results improve the best-known constants in 13 out of 15 structured settings. Examples include the improved transpose-product constant $\gamma_{gt} = 8/13$ and a new rank-14 scheme for skew-symmetric–general multiplication $kg$, surpassing the previously known rank 15.

One of the most notable outputs is our $\langle 4,4,4:34\rangle_{gt}$ scheme for computing $A A^\top$, which requires 10 recursive calls and 24 general multiplications. This provides a compact and highly structured algorithm for producing all upper-triangular blocks of the result matrix. A high-level visualization of such a structured decomposition is shown below.

Figure 2: Structured matrix multiplication $C = AA^T$ for a 4x4 block with symmetric $C$. Coefficients lie in $\mathbb{Z}$. Operation count: 34 multiplications (10 $\texttt{gt}$ + 24 $\texttt{gg}$) and 188 additions.

Several of the discovered schemes, especially for symmetric and skew-symmetric formats, reveal structure that cannot occur in simple tensor factorizations. Many of them exhibit intrinsic non-commutativity that emerges only in recursive block form, reinforcing the importance of working with true multiplication tensors rather than naïvely symmetrized approximations.

Altogether, our exploration required on the order of 1000 CPU-days across all structured formats, though many individual schemes were found in minutes. The combination of flip-graph navigation, finite-field exploration, and systematic lifting yields an effective and scalable pipeline for discovering new fast algorithms. We summarize these findings in a complete catalogue, along with explicit decompositions for all improved formats.

We see this work as a step toward establishing flip-graph search as a robust and efficient tool for algorithm discovery in bilinear algebra. The same principles extend naturally beyond matrix multiplication to polynomial multiplication, convolution-type bilinear maps, and even more exotic structures such as quaternionic or octonionic products. By charting the structured landscape at small base sizes, we create a foundation for future recursive schemes and for hybrid approaches that combine guided search with machine learning within the space of correct tensor decompositions.

[1] K. Khoruzhii, P. Gelß, S. Pokutta. Faster Algorithms for Structured Matrix Multiplication via Flip Graph Search. submitted. arXiv: 2511.10786